Imagine you’re a doctor using a complex AI model to diagnose a patient. The model predicts a serious illness, but you’re left wondering: “Why?” That’s the core issue with many powerful machine learning models – they’re black boxes, offering predictions without revealing their reasoning. This lack of transparency can be daunting, especially in critical domains like healthcare, finance, and law. This is where interpretable machine learning comes in, providing insights into the decision-making process of these models, giving you a peek inside the black box.

This guide explores interpretable machine learning with a focus on how Python helps you understand and interpret your models. We’ll cover the key concepts and techniques, work through practical Python examples, and close with books and freely available resources for further study.

What is Interpretable Machine Learning?

Interpretable machine learning, closely related to “explainable AI” (XAI), focuses on building models that are not only accurate but also transparent and easy to understand, and on explaining the predictions of models that are not. It’s like having a “why” button for your predictions, giving you the rationale behind the model’s output. This transparency is crucial for:

- Building trust: When users understand how a model works, they’re more likely to trust its decisions.

- Identifying biases: Transparency helps reveal biases within the model’s outputs, enabling data scientists to address them and create fair and ethical systems.

- Improving model performance: Understanding the reasoning behind predictions allows you to identify areas for improvement and enhance model accuracy.

Why Python for Interpretable ML?

Python’s popularity in machine learning stems from its vast libraries and intuitive syntax. But what makes it perfect for interpretable machine learning specifically?

- Rich Libraries: Python offers a plethora of libraries specifically designed for interpretable machine learning, such as SHAP, LIME, and ELI5. These libraries provide powerful tools for model visualization and explanation.

- Easy to Use: Python’s user-friendly nature makes it easy to implement and apply interpretability techniques. Even novice practitioners can quickly get started with these libraries.

Common Techniques for Interpretable Machine Learning

Interpretable machine learning isn’t a one-size-fits-all approach. A collection of techniques, each with its own strengths, is used to analyze model behavior:

1. Feature Importance

Feature importance techniques identify which input features have the most influence on the model’s predictions. This allows you to understand which factors are driving the model’s decisions.
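
One model-agnostic way to measure feature importance is permutation importance: shuffle one feature at a time and see how much the score on held-out data drops. The sketch below assumes scikit-learn’s permutation_importance helper, the Iris dataset, and a random forest; the split size, number of repeats, and random seeds are illustrative choices, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=0, stratify=iris.target
)

model = RandomForestClassifier(n_estimators=100, random_state=0)
model.fit(X_train, y_train)

# Shuffle each feature column in turn and record how much held-out accuracy drops.
result = permutation_importance(model, X_test, y_test, n_repeats=20, random_state=0)

# Rank features from largest to smallest mean drop in accuracy.
ranking = sorted(
    zip(iris.feature_names, result.importances_mean, result.importances_std),
    key=lambda item: item[1],
    reverse=True,
)
for name, mean, std in ranking:
    print(f"{name}: {mean:.3f} +/- {std:.3f}")
```

Because the score is computed on data the model has not seen, a feature only ranks highly if the model actually relies on it to generalize.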

2. Decision Trees and Rule-Based Models

Decision trees and rule-based models are inherently interpretable because their logic can be read directly. In a decision tree, each internal node tests a single feature against a threshold or category, each branch corresponds to one outcome of that test, and each leaf holds a prediction. Rule-based models express the same kind of logic as explicit if-then rules. Both are easy to visualize and follow, provided they stay small.
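
To see what “reading the model” looks like in practice, here is a minimal sketch that fits a shallow tree on the Iris dataset and prints it as nested rules with scikit-learn’s export_text. The depth limit of 2 is an assumption made to keep the output short.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()

# A shallow tree stays small enough to read in full.
tree = DecisionTreeClassifier(max_depth=2, random_state=0)
tree.fit(iris.data, iris.target)

# export_text renders the fitted tree as nested if/else rules.
rules = export_text(tree, feature_names=list(iris.feature_names))
print(rules)
```

Each indented line in the output is a threshold test on one feature, and each “class:” line is a leaf, so any prediction can be traced by hand from the root.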

3. Local Interpretable Model-Agnostic Explanations (LIME)

LIME explains individual predictions by approximating the complex model with a simpler, more interpretable model locally around the input data point being analyzed. This local explanation provides insight into the model’s behavior for specific instances.
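
The idea can be sketched from scratch in a few steps: perturb the instance, query the black-box model, weight the perturbed samples by how close they are to the instance, and fit a weighted linear model. This is a simplified illustration, not the lime library’s implementation; the Gaussian perturbations, the sample count of 1000, and the kernel width are all assumptions chosen for the example.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import Ridge

iris = load_iris()
X, y = iris.data, iris.target
black_box = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

rng = np.random.default_rng(0)
instance = X[100]  # the single prediction to explain
target_class = int(black_box.predict(instance.reshape(1, -1))[0])

# 1. Sample perturbed points around the instance (noise scaled per feature).
scale = X.std(axis=0)
samples = instance + rng.normal(size=(1000, X.shape[1])) * scale

# 2. Ask the black box for its probability of the target class at each sample.
probs = black_box.predict_proba(samples)[:, target_class]

# 3. Weight samples by proximity to the instance (exponential kernel).
offsets = (samples - instance) / scale
distances = np.linalg.norm(offsets, axis=1)
kernel_width = 0.75 * np.sqrt(X.shape[1])  # assumed width, for illustration only
weights = np.exp(-(distances ** 2) / kernel_width ** 2)

# 4. Fit a weighted linear surrogate; its coefficients are the local explanation.
surrogate = Ridge(alpha=1.0)
surrogate.fit(offsets, probs, sample_weight=weights)

for name, coef in zip(iris.feature_names, surrogate.coef_):
    print(f"{name}: {coef:+.3f}")
```

The coefficients describe the model only in the neighborhood of this one instance; a different instance can produce a very different explanation.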

4. SHapley Additive exPlanations (SHAP)

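SHAP takes a game-theoretic approach based on Shapley values: it assigns each feature a contribution that describes how much that feature moved the model’s output away from a baseline (average) prediction. The contributions for a single prediction sum to the difference between that prediction and the baseline, and they can be aggregated across many predictions for a global view of the model.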
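
For a model with only a handful of features, Shapley values can be computed exactly by enumerating every coalition of features. The sketch below does this for one Iris prediction. It assumes that an “absent” feature is simulated by substituting values from a small background sample, which is one common convention rather than the only one. The shap library relies on faster algorithms and approximations; this brute-force version is only meant to show the definition.

```python
from itertools import combinations
from math import factorial

import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

iris = load_iris()
X, y = iris.data, iris.target
model = LogisticRegression(max_iter=1000).fit(X, y)

background = X[::10]  # 15 rows standing in for "feature absent" (an assumption)
instance = X[120]     # the prediction to explain
target_class = 2      # virginica
n_features = X.shape[1]


def coalition_value(present):
    """Mean predicted probability when only `present` features take the instance's values."""
    mask = np.array([j in present for j in range(n_features)])
    data = np.where(mask, instance, background)
    return model.predict_proba(data)[:, target_class].mean()


shapley = np.zeros(n_features)
for i in range(n_features):
    others = [j for j in range(n_features) if j != i]
    for size in range(len(others) + 1):
        # Shapley weight for coalitions of this size.
        weight = factorial(size) * factorial(n_features - size - 1) / factorial(n_features)
        for subset in combinations(others, size):
            gain = coalition_value(subset + (i,)) - coalition_value(subset)
            shapley[i] += weight * gain

base_value = coalition_value(())
prediction = model.predict_proba(instance.reshape(1, -1))[0, target_class]

for name, value in zip(iris.feature_names, shapley):
    print(f"{name}: {value:+.4f}")
print(f"baseline + contributions = {base_value + shapley.sum():.4f}")
print(f"model prediction         = {prediction:.4f}")
```

The last two printed numbers should agree: the contributions add up to the gap between the baseline and the actual prediction. The cost grows exponentially with the number of features, which is why practical tools avoid full enumeration.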

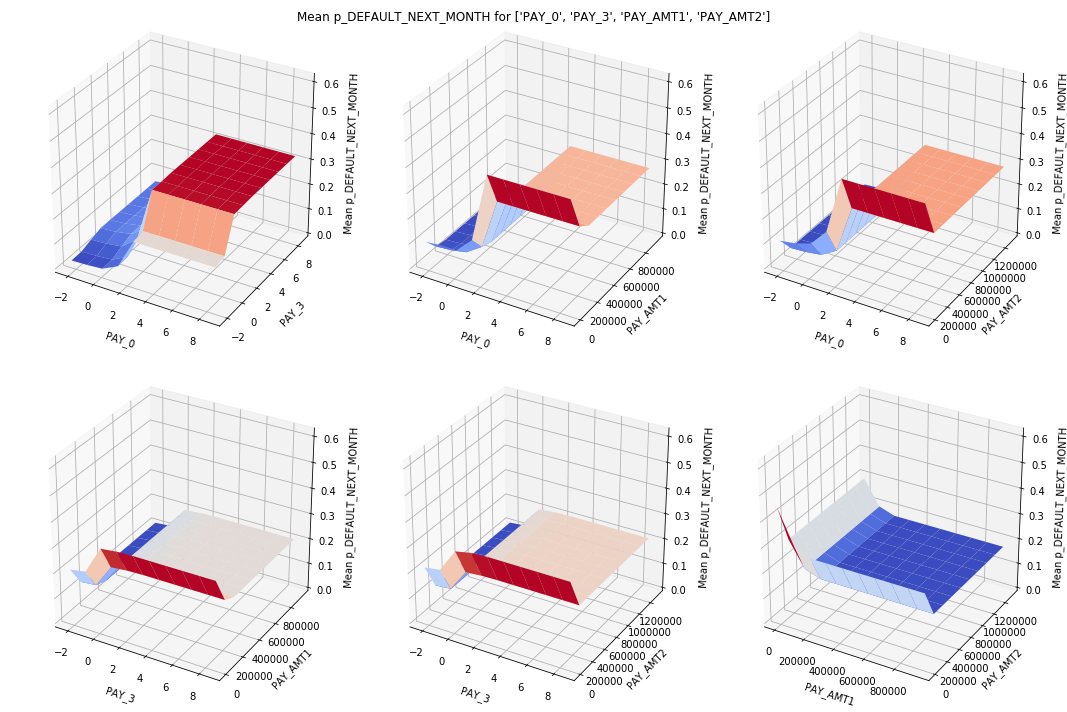

5. Partial Dependence Plots (PDP)

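PDPs visualize the average effect of a feature on the model’s predictions: the feature is set to each value in a range while the other features keep their observed values, and the resulting predictions are averaged. This shows how changes in a specific feature influence the output, potentially revealing non-linear relationships. The plots can mislead when features are strongly correlated, because the averaging then includes unrealistic feature combinations.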
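
A partial dependence curve can be computed by hand in a few lines, which makes the averaging explicit. The sketch below assumes a random forest on the Iris dataset and looks at petal length against the predicted probability of the virginica class; the grid of 20 points is an arbitrary choice.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

iris = load_iris()
X, y = iris.data, iris.target
model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

feature = 2       # petal length (cm)
target_class = 2  # virginica
grid = np.linspace(X[:, feature].min(), X[:, feature].max(), 20)

partial_dependence = []
for value in grid:
    X_modified = X.copy()
    X_modified[:, feature] = value  # force the feature to one value for every row
    # Average over the rows, i.e. over the observed values of the other features.
    avg = model.predict_proba(X_modified)[:, target_class].mean()
    partial_dependence.append(avg)
partial_dependence = np.array(partial_dependence)

for value, avg in zip(grid, partial_dependence):
    print(f"petal length = {value:.2f} cm -> mean P(virginica) = {avg:.3f}")
```

Plotting partial_dependence against grid gives the usual PDP curve.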

Interpretable Machine Learning in Action: Python Examples

Let’s dive into some practical examples of how to use Python for interpretable machine learning. These examples demonstrate the techniques discussed above, showcasing how to use these libraries in a real-world context.

Example 1: Feature Importance using Scikit-learn

In this example, we’ll train scikit-learn’s DecisionTreeClassifier and then read the impurity-based feature importances from its feature_importances_ attribute:

from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Load the Iris dataset
iris = load_iris()
X = iris.data
y = iris.target

# Split the data into training and testing sets (fixed seed for repeatable results)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train a decision tree classifier
dtc = DecisionTreeClassifier(random_state=42)
dtc.fit(X_train, y_train)

# Get the impurity-based feature importances (they sum to 1)
feature_importances = dtc.feature_importances_

# Print each feature name next to its importance
for name, importance in zip(iris.feature_names, feature_importances):
    print(f"{name}: {importance:.3f}")

Example 2: LIME with the lime Library

This example demonstrates how to use the lime library to explain individual predictions. We will use the Iris dataset and a random forest classifier. LIME focuses on understanding the model’s behavior for specific inputs. Note that show_in_notebook() renders its output only inside a Jupyter notebook.

from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
import lime
import lime.lime_tabular

# Load the Iris dataset
iris = load_iris()
X = iris.data
y = iris.target

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Train a random forest classifier
rfc = RandomForestClassifier()
rfc.fit(X_train, y_train)

# Create a LIME explainer from the training data
explainer = lime.lime_tabular.LimeTabularExplainer(
    X_train,
    feature_names=iris.feature_names,
    class_names=iris.target_names
)

# Select a data point from the test set to explain
index = 10
instance = X_test[index]

# Explain the prediction for the most probable class
exp = explainer.explain_instance(
    instance,
    rfc.predict_proba,
    num_features=4,
    top_labels=1
)

# Show the explanation (requires a Jupyter notebook)
exp.show_in_notebook()

Example 3: SHAP Values with the shap Library

This example shows how the shap library can be used to calculate SHAP values, which quantify each feature’s contribution to a prediction. We’ll build a logistic regression model to predict customer churn. The file name telecom_churn.csv and the Churn column are placeholders for your own dataset, and the features are assumed to be numeric, so encode any categorical columns first.

import pandas as pd
import shap
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Load the churn dataset
data = pd.read_csv('telecom_churn.csv')  # Replace with your dataset

# Prepare features and target variable (features must be numeric)
X = data.drop('Churn', axis=1)  # Adjust column names based on your dataset
y = data['Churn']

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Train a logistic regression model
lr = LogisticRegression(max_iter=1000)
lr.fit(X_train, y_train)

# Create a SHAP explainer, using the training data as the background
explainer = shap.LinearExplainer(lr, X_train)

# Calculate SHAP values for the test set
shap_values = explainer.shap_values(X_test)

# Visualize the SHAP values to see feature contributions
shap.summary_plot(shap_values, X_test)

Resources and Free PDF Downloads

Want to learn more about interpretable machine learning and explore these techniques in greater depth? Here are some excellent resources:

Books:

- Interpretable Machine Learning: A Guide for Making Black Box Models Explainable by Christoph Molnar

- Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, edited by Wojciech Samek, Grégoire Montavon, Andrea Vedaldi, Lars Kai Hansen, and Klaus-Robert Müller

Free PDF Downloads:

Christoph Molnar’s book is free to read online. Beyond that, a search on Google Scholar, ResearchGate, or arXiv for keywords like “interpretable machine learning python pdf” turns up freely available papers, guides, and tutorials.

Tips and Expert Advice

Here are some tips to get you started on your interpretable machine learning journey:

- Start Simple: Begin with techniques like feature importance and decision trees. They are easier to understand and can provide valuable insights.

- Visualize Data: Use visualizations like partial dependence plots and SHAP summary plots to gain deeper understanding of your model’s behavior.

- Be Consistent: Apply interpretability techniques throughout your machine learning pipeline, from data preprocessing to model evaluation, to ensure transparency from start to finish.

- Domain Expertise: Combine interpretable machine learning with domain-specific knowledge to interpret model results effectively.

Always remember that interpretability is an ongoing process, requiring constant evaluation and reassessment. As new techniques and approaches emerge, stay updated to leverage the latest advancements.

FAQ

Q: What are the limitations of interpretable machine learning?

While powerful, interpretable machine learning also has limitations. Some techniques are complex to implement, and no single method is best in every situation. Post-hoc explanations such as LIME and SHAP are approximations of the model’s behavior, so they can themselves be misleading. In addition, interpretability can come at the cost of predictive performance: a simpler, more interpretable model may be less accurate than a complex one, although this is not always the case. Finding the right balance between interpretability and performance is crucial for practical applications.
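
One way to check how much accuracy interpretability costs on your own data is to cross-validate a simple model and a complex one side by side. The sketch below assumes scikit-learn’s built-in breast cancer dataset, a depth-3 decision tree as the interpretable model, and a random forest as the black box; all of these are stand-ins for your own data and models.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

candidates = {
    "depth-3 decision tree": DecisionTreeClassifier(max_depth=3, random_state=0),
    "random forest": RandomForestClassifier(n_estimators=200, random_state=0),
}

# Compare mean 5-fold cross-validated accuracy for each model.
scores = {}
for name, model in candidates.items():
    scores[name] = cross_val_score(model, X, y, cv=5).mean()
    print(f"{name}: mean accuracy = {scores[name]:.3f}")
```

If the gap between the two scores is small, the simpler model may be the better choice for a setting where explanations matter.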

Q: How can I choose the right interpretability technique for my project?

The choice of technique depends on your goal, the type of model you’re using, and the complexity of the problem. For simple models like decision trees, basic feature importance may suffice. For complex models, techniques like LIME or SHAP may be more effective. Analyze your project’s specifics and select the technique that best addresses your interpretability needs.

Conclusion

Interpretable machine learning is a rapidly growing field, empowering us to build more transparent and explainable AI systems. Python, with its abundant libraries and resources, is a powerful tool for exploring interpretability techniques.

Are you ready to dive deeper into interpretable machine learning with Python? Share your thoughts and any questions you might have in the comments below. Let’s discuss how to create AI solutions that are both powerful and understandable.